It’s time to set up an Nginx frontend for our services hosted on Docker. But first, we will configure Certbot to retrieve SSL certificates.

Certbot

Docker image creation for Raspberry Pi

Certbot is the ACME client developed by the EFF and recommended by Let’s Encrypt to request SSL certificates. We will use the dns-ovh plugin to obtain certificates because a DNS challenge is the easiest and most flexible option: we don’t have to open any port for an HTTP challenge, and we can request a certificate for any of our domains or subdomains, or even a wildcard certificate. As usual, we will use a Docker container.

The official Certbot Docker image doesn’t support the ARM architecture yet, so we will build our own image from the official Dockerfiles.

$ mkdir /opt/docker/certbot && cd /opt/docker/certbot

-- Build the core image

$ mkdir core && cd core

$ wget https://raw.githubusercontent.com/certbot-docker/certbot-docker/master/core/Dockerfile

-- Certbot has preliminary support for cross-platform compilation but we will not use it when building on the Raspberry Pi

$ touch qemu--static

$ docker build \
    --build-arg TARGET_ARCH=arm32v6 \
    --build-arg CERTBOT_VERSION=0.39.0 \
    --tag certbot/certbot:arm32v6-v0.39.0 .

$ cd /opt/docker/certbot

-- Build the dns-ovh image

$ mkdir plugin && cd plugin

$ wget https://raw.githubusercontent.com/certbot-docker/certbot-docker/master/plugin/Dockerfile

$ touch qemu--static

$ docker build \
    --build-arg TARGET_ARCH=arm32v6 \
    --build-arg CERTBOT_VERSION=0.39.0 \
    --build-arg PLUGIN_NAME=dns-ovh \
    --tag certbot/certbot:local-dns-ovh-arm32v6-0.39.0 .
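The TARGET_ARCH value used in the builds above appears to follow the architecture prefixes used on Docker Hub (arm32v6, arm32v7, arm64v8, amd64). As a minimal sketch under that assumption, the hypothetical helper below maps the machine type reported by uname -m to such a prefix. The function name is made up for illustration, and the mapping only covers a few common cases.

```shell
# Hypothetical helper: map a `uname -m` machine type to a Docker Hub
# architecture prefix. Only a few common values are covered.
docker_arch_prefix() {
    case $1 in
        armv6l)  echo arm32v6 ;;
        armv7l)  echo arm32v7 ;;
        aarch64) echo arm64v8 ;;
        x86_64)  echo amd64 ;;
        *) echo "unsupported machine type: $1" >&2; return 1 ;;
    esac
}

# Usage on the build host:
#   docker_arch_prefix "$(uname -m)"
```

Images built for arm32v6 generally also run on ARMv7 boards, which is presumably why this guide uses arm32v6 throughout.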

Dns-OVH plugin configuration

To use the dns-ovh plugin, we need to create a file containing the OVH API Credentials (OVH Europe / OVH North America).

The required permissions are:

- GET /domain/zone/
- GET /domain/zone/[DOMAIN]/status
- GET /domain/zone/[DOMAIN]/record
- GET /domain/zone/[DOMAIN]/record/*
- POST /domain/zone/[DOMAIN]/record
- POST /domain/zone/[DOMAIN]/refresh
- DELETE /domain/zone/[DOMAIN]/record/*

Once the credentials are generated, we write them to a file:

$ export DNS_OVH_ENDPOINT=ovh-eu

$ export DNS_OVH_APP_KEY=KEY

$ export DNS_OVH_APP_SECRET=SECRET

$ export DNS_OVH_CONSUMER_KEY=CONSUMER_KEY

$ cd /opt/docker/certbot/

$ tee ovh_credentials > /dev/null << EOF

dns_ovh_endpoint = ${DNS_OVH_ENDPOINT}

dns_ovh_application_key = ${DNS_OVH_APP_KEY}

dns_ovh_application_secret = ${DNS_OVH_APP_SECRET}

dns_ovh_consumer_key = ${DNS_OVH_CONSUMER_KEY}

EOF

$ chmod 600 ovh_credentials
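Because the credentials file is generated from environment variables, an unset variable silently produces an empty value. The sketch below is one possible sanity check, not part of Certbot: the hypothetical function check_ovh_credentials verifies that each of the four keys written above is present with a non-empty value.

```shell
# Hypothetical check: every dns_ovh_* key must be present with a non-empty value.
check_ovh_credentials() {
    file=$1
    for key in dns_ovh_endpoint dns_ovh_application_key \
               dns_ovh_application_secret dns_ovh_consumer_key; do
        # Match "key = value" where value contains at least one non-space character.
        if ! grep -Eq "^${key}[[:space:]]*=[[:space:]]*[^[:space:]]+" "$file"; then
            echo "missing or empty: ${key}" >&2
            return 1
        fi
    done
}

# Usage:
#   check_ovh_credentials /opt/docker/certbot/ovh_credentials && echo "credentials look complete"
```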

Obtain certificates

Finally, we define a shell function to call certbot easily

-- ${PWD} is expanded when the file is written, so run this from /opt/docker/certbot

$ tee -a ~/.bash_aliases > /dev/null << EOF
function certbot() {
    docker run --rm \\
        -v ${PWD}/certs:/etc/letsencrypt \\
        -v ${PWD}/logs:/var/log/letsencrypt \\
        -v ${PWD}/ovh_credentials:/etc/ovh_credentials \\
        certbot/certbot:local-dns-ovh-arm32v6-0.39.0 \\
        \$1 \\
        --dns-ovh --dns-ovh-credentials /etc/ovh_credentials \\
        "\${@:2}"
}
EOF

$ source ~/.bash_aliases

Now we can request a certificate with the command certbot certonly -d pisin.example.org.

To request a certificate for multiple domains or a wildcard, we can invoke certbot with a command like certbot certonly -d example.org -d pisin.example.org -d '*.dev.example.org' (the wildcard is quoted so that the shell does not try to expand it). Note that a certificate can be obtained even if there is no existing entry for the name in the DNS records.

Once a certificate is retrieved, we can check its content with the command sudo openssl x509 -noout -text -in /opt/docker/certbot/certs/live/pisin.example.org/cert.pem.
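A wildcard name such as *.dev.example.org only covers hosts exactly one label below dev.example.org. It covers neither dev.example.org itself nor deeper names, which is why the multi-domain example above lists several names explicitly. The sketch below illustrates that matching rule with a hypothetical helper, wildcard_covers. It is a simplified illustration, not the validation logic used by Certbot or by browsers.

```shell
# Hypothetical helper: succeed if a certificate name (plain or "*." wildcard)
# covers the given host name.
wildcard_covers() {
    pattern=$1
    host=$2
    case $pattern in
        '*.'*) suffix=${pattern#\*} ;;            # "*.dev.example.org" -> ".dev.example.org"
        *) [ "$pattern" = "$host" ]; return ;;    # plain names must match exactly
    esac
    case $host in
        *"$suffix") label=${host%"$suffix"} ;;    # part of the host in front of the suffix
        *) return 1 ;;
    esac
    case $label in
        ''|*.*) return 1 ;;                       # empty or multi-label prefix: not covered
        *) return 0 ;;
    esac
}

# Usage:
#   wildcard_covers '*.dev.example.org' api.dev.example.org && echo covered
```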

Renew certificates

The certificates provided by Let’s Encrypt are valid for 90 days, so we need to renew them regularly. We simply use a cron task to renew the certificates automatically when needed. The following cron entry checks all installed certificates and renews those expiring in less than 30 days.

4 4 */15 * * bash -c "source ~/.bash_aliases && certbot renew && docker-compose -f /opt/docker/nginx/docker-compose.yml exec -T nginx nginx -s reload" >/dev/null 2>&1

(The command also reloads the Nginx server we will install below; the -T option disables pseudo-TTY allocation, since no terminal is available under cron.)
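To see why this schedule is frequent enough, it helps to list the days matched by the */15 day-of-month field. The sketch below uses a hypothetical helper, cron_step_days, and assumes the usual cron behaviour of stepping through the range 1 to 31 from its first value.

```shell
# Hypothetical helper: list the days of the month matched by a "*/STEP"
# day-of-month field (range 1-31, stepping from 1).
cron_step_days() {
    step=$1
    seq 1 "$step" 31 | tr '\n' ' ' | sed 's/ $//'
}

cron_step_days 15   # prints: 1 16 31
```

The job therefore runs at 04:04 on the 1st, 16th and (when it exists) 31st of each month. Consecutive runs are at most about 15 days apart, so at least one run falls inside the 30-day renewal window.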

Nginx

We use an Nginx Docker image to set up a single entry point web server that exposes our internal services (pi-hole admin, transmission…).

General configuration

We start by creating a docker-compose.yml file for Nginx

$ mkdir /opt/docker/nginx && cd /opt/docker/nginx

$ tee docker-compose.yml > /dev/null << "EOF"
version: "3"
services:
  nginx:
    image: nginx:mainline-alpine
    ports:
      - 80:80
      - 443:443
    restart: unless-stopped
    volumes:
      - ./conf:/etc/nginx:ro
      - ./html:/usr/share/nginx/html:ro
      - ./logs:/var/log/nginx
      - /opt/docker/certbot/certs/live:/etc/letsencrypt/live:ro
      - /opt/docker/certbot/certs/archive:/etc/letsencrypt/archive:ro
networks:
  default:
    external:
      name: br0
EOF

We create the main Nginx configuration file

$ mkdir conf && cd conf

-- Download the official Nginx mime.types file

$ wget https://github.com/nginx/nginx/raw/master/conf/mime.types

-- Generate dhparam file

$ openssl dhparam -out dhparam.pem 2048

-- Create the main configuration file

$ tee nginx.conf > /dev/null <<"EOF"

worker_processes auto;

worker_rlimit_nofile 1024;

events {

multi_accept on;

worker_connections 1024;

}

http {

charset utf-8;

sendfile on;

tcp_nopush on;

tcp_nodelay on;

server_tokens off;

log_not_found off;

types_hash_max_size 2048;

client_max_body_size 25M;

# MIME

include mime.types;

default_type application/octet-stream;

# Custom logging with virtual host so we can use a single log file

log_format vcombined '$host:$server_port '

'$remote_addr - $remote_user [$time_local] '

'"$request" $status $body_bytes_sent '

'"$http_referer" "$http_user_agent"';

access_log /var/log/nginx/access.log vcombined;

error_log /var/log/nginx/error.log warn;

# SSL

ssl_session_timeout 1d;

ssl_session_cache shared:SSL:10m;

ssl_session_tickets off;

# Diffie-Hellman parameter for DHE ciphersuites

ssl_dhparam /etc/nginx/dhparam.pem;

# Mozilla Intermediate configuration

ssl_protocols TLSv1.2 TLSv1.3;

ssl_ciphers ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384;

# OCSP Stapling

ssl_stapling on;

ssl_stapling_verify on;

resolver 1.1.1.1 1.0.0.1 8.8.8.8 8.8.4.4 valid=60s;

resolver_timeout 2s;

# Sites configs

include /etc/nginx/sites-enabled/*;

}

EOF
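Since the vcombined format above prefixes every line with the virtual host and port, a single access log can still be broken down per site. The sketch below is one rough way to do that with awk. The function name vhost_status_counts is made up for illustration, and it assumes well-formed request lines of the form "METHOD path protocol", so that the status code is the tenth whitespace-separated field.

```shell
# Hypothetical helper: count requests per "host:port status" pair in a
# vcombined access log. Assumes the status code is the 10th field.
vhost_status_counts() {
    awk '{ count[$1 " " $10]++ }
         END { for (k in count) print k, count[k] }' "$@" | sort
}

# Usage:
#   vhost_status_counts /opt/docker/nginx/logs/access.log
```

Malformed requests (for example from scanners) can shift the fields, so the output is best read as an approximation.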

Then we create some configuration files that will be shared between the site configurations

$ tee general.conf > /dev/null << "EOF"

# favicon.ico

location = /favicon.ico {

log_not_found off;

access_log off;

}

# robots.txt

location = /robots.txt {

log_not_found off;

access_log off;

}

# assets, media

location ~* \.(?:css(\.map)?|js(\.map)?|jpe?g|png|gif|ico|cur|heic|webp|tiff?|mp3|m4a|aac|ogg|midi?|wav|mp4|mov|webm|mpe?g|avi|ogv|flv|wmv)$ {

expires 7d;

access_log off;

}

# svg, fonts

location ~* \.(?:svgz?|ttf|ttc|otf|eot|woff2?)$ {

add_header Access-Control-Allow-Origin "*";

expires 7d;

access_log off;

}

# gzip

gzip on;

gzip_vary on;

gzip_proxied any;

gzip_comp_level 6;

gzip_types text/plain text/css text/xml application/json application/javascript application/rss+xml application/atom+xml image/svg+xml;

EOF

$ tee proxy.conf > /dev/null << "EOF"

# HTTP 1.1 support

proxy_http_version 1.1;

proxy_buffering off;

proxy_set_header Host $http_host;

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection "";

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

# Mitigate httpoxy attack

proxy_set_header Proxy "";

EOF

$ tee security.conf > /dev/null << "EOF"

# security headers

add_header X-Frame-Options "SAMEORIGIN" always;

add_header X-XSS-Protection "1; mode=block" always;

add_header X-Content-Type-Options "nosniff" always;

add_header Referrer-Policy "no-referrer-when-downgrade" always;

add_header Content-Security-Policy "default-src 'self' http: https: data: blob: 'unsafe-inline'" always;

add_header Strict-Transport-Security "max-age=31536000; includeSubDomains; preload" always;

# . files

location ~ /\.(?!well-known) {

deny all;

}

EOF

Finally, we create our site configuration files

$ export SITE_DOMAIN=pisin.example.org

$ mkdir sites-enabled && cd sites-enabled

$ tee default.conf > /dev/null << EOF

# HTTP redirect

server {

listen 80;

listen [::]:80;

server_name _;

include general.conf;

include security.conf;

location / {

return 301 https://${SITE_DOMAIN}\$request_uri;

}

}

# HTTPS fallback

server {

listen 443 ssl http2;

listen [::]:443 ssl http2;

server_name _;

# SSL

ssl_certificate /etc/letsencrypt/live/${SITE_DOMAIN}/fullchain.pem;

ssl_certificate_key /etc/letsencrypt/live/${SITE_DOMAIN}/privkey.pem;

ssl_trusted_certificate /etc/letsencrypt/live/${SITE_DOMAIN}/chain.pem;

include general.conf;

include security.conf;

location / {

return 403;

}

}

EOF

$ tee ${SITE_DOMAIN}.conf > /dev/null << EOF

server {

listen 443 ssl http2;

listen [::]:443 ssl http2;

server_name ${SITE_DOMAIN};

root /usr/share/nginx/html;

# SSL

ssl_certificate /etc/letsencrypt/live/${SITE_DOMAIN}/fullchain.pem;

ssl_certificate_key /etc/letsencrypt/live/${SITE_DOMAIN}/privkey.pem;

ssl_trusted_certificate /etc/letsencrypt/live/${SITE_DOMAIN}/chain.pem;

include general.conf;

include security.conf;

# index.html fallback

location / {

autoindex on;

}

location ^~ /transmission/ {

proxy_pass http://transmission:9091/transmission/;

proxy_pass_header X-Transmission-Session-Id;

include proxy.conf;

}

location = /transmission/ {

return 302 https://\${host}\${request_uri}web/;

}

location ^~ /pihole/ {

rewrite /pihole/(.*) /admin/\$1 break;

proxy_pass http://pihole:80/;

include proxy.conf;

}

}

EOF
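The site files above use an unquoted EOF delimiter, so the shell substitutes ${SITE_DOMAIN} while the backslash keeps Nginx variables such as \$request_uri literal. The earlier shared files use a quoted "EOF", where nothing is expanded. The sketch below shows the difference with two hypothetical functions that print a single line each.

```shell
SITE_DOMAIN=pisin.example.org

# Unquoted delimiter: ${SITE_DOMAIN} is expanded by the shell, while the
# escaped \$request_uri is written out as a literal $request_uri for Nginx.
render_expanded() {
cat << EOF
return 301 https://${SITE_DOMAIN}\$request_uri;
EOF
}

# Quoted delimiter: nothing is expanded, so Nginx variables need no escaping.
render_literal() {
cat << "EOF"
proxy_set_header Host $http_host;
EOF
}

render_expanded   # prints: return 301 https://pisin.example.org$request_uri;
render_literal    # prints: proxy_set_header Host $http_host;
```

A forgotten backslash in an unquoted here-document is replaced by an empty string (or by whatever the shell variable happens to contain), so it is worth reading the generated file once before starting Nginx.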

Now we can start our container by running docker-compose up -d from /opt/docker/nginx, and we can access our server at https://pisin.example.org.

SSL Client certificate

If you want to secure access to your Nginx server, you can set up SSL client certificates. First, you need to follow this guide to create your CA and your client certificates.

Then you have to add a volume to docker-compose.yml

volumes:

[...]

- /root/ca/intermediate/certs/ca-chain.cert.pem:/etc/ssl/ca-chain.cert.pem:ro

Add the following lines to pisin.example.org.conf file

server {

[...]

# Client certificate validation

ssl_client_certificate /etc/ssl/ca-chain.cert.pem;

ssl_verify_client on;

[...]

}

Log rotation

Nginx logs each request, and the log files can grow quickly. We set up log rotation to compress old files and keep only the last 6 rotated files. That is about 6 months of logs, or less if a log exceeds 50 MB before the end of a month.

$ sudo tee /etc/logrotate.d/docker-nginx > /dev/null << "EOF"

/opt/docker/nginx/logs/*.log {

monthly

maxsize 50M

missingok

rotate 6

compress

delaycompress

notifempty

sharedscripts

postrotate

docker-compose -f /opt/docker/nginx/docker-compose.yml exec -T nginx nginx -s reload

endscript

}

EOF

GoAccess logs Analyzer

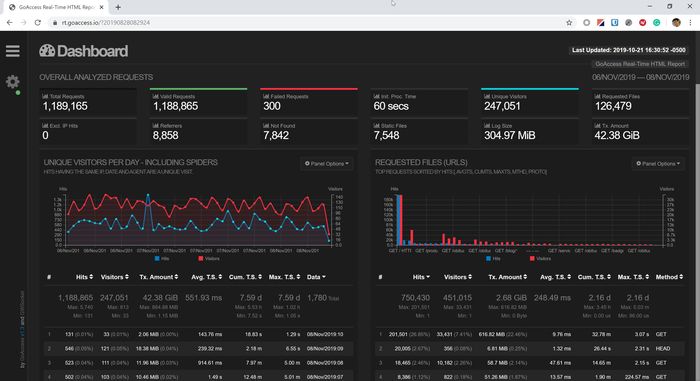

GoAccess is a real-time Web logs Analyzer. It helps to quickly view and analyze our web server statistics.

GoAccess installation and configuration

As usual, we create a Docker image compatible with the ARM architecture.

$ mkdir /opt/docker/goaccess && cd /opt/docker/goaccess

$ wget -qO- https://github.com/allinurl/goaccess/archive/master.tar.gz | tar xzvf -

Then we create the docker-compose.yml file to build and run GoAccess.

$ tee docker-compose.yml > /dev/null << "EOF"
version: "3"
services:
  goaccess:
    image: allinurl/goaccess:master
    build:
      context: ./goaccess-master
    volumes:
      - ./config:/srv/config
      - /opt/docker/nginx/logs:/srv/logs:ro
      - /opt/docker/nginx/html/goaccess:/srv/goaccess
    command: ["--no-global-config", "--config-file=/srv/config/goaccess.conf"]
    restart: unless-stopped
networks:
  default:
    external:
      name: br0
EOF

And the configuration file goaccess.conf

$ mkdir config && cd config

$ wget https://raw.githubusercontent.com/allinurl/goaccess/master/config/goaccess.conf

The configuration file is quite long, but in the end only a few options need to be changed:

log-format VCOMBINED

html-prefs {"theme":"dark-gray","perPage":10}

real-time-html true

ws-url wss://pisin.example.org:443/goaccess/wss

log-file /srv/logs/access.log

output /srv/goaccess/real-time.html
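These options can be edited by hand. For a repeatable setup, a small script can apply them instead. The sketch below is one possible approach, not something GoAccess provides: the hypothetical function set_option removes any active (uncommented) line for a key and appends the new value at the end of the file.

```shell
# Hypothetical helper: set "key value" in a GoAccess-style config file.
# Commented-out lines (starting with #) are left untouched.
set_option() {
    conf=$1
    key=$2
    value=$3
    # Drop any active line for this key; grep exits non-zero when it prints nothing.
    grep -v "^${key}[[:space:]]" "$conf" > "${conf}.tmp" || true
    printf '%s %s\n' "$key" "$value" >> "${conf}.tmp"
    mv "${conf}.tmp" "$conf"
}

# Usage, from /opt/docker/goaccess/config:
#   set_option goaccess.conf log-format VCOMBINED
#   set_option goaccess.conf real-time-html true
#   set_option goaccess.conf log-file /srv/logs/access.log
```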

Start GoAccess with docker-compose up -d and check the logs with docker-compose logs

Nginx configuration

Edit the site configuration /opt/docker/nginx/conf/sites-enabled/pisin.example.org.conf and add the following

server {

[...]

location ^~ /goaccess/ {

add_header Content-Security-Policy "script-src 'self' 'unsafe-inline' 'unsafe-eval'; style-src 'self' 'unsafe-inline'" always;

}

location ^~ /goaccess/wss {

proxy_pass http://goaccess:7890/wss;

include proxy.conf;

proxy_set_header Connection "upgrade";

proxy_read_timeout 1h; # Avoid websocket disconnection

}

[...]

}

Reload Nginx configuration with docker-compose exec nginx nginx -s reload